UI for AI Agents

16 Nov 2023

UI & UX - Designing in a world of agents

Since the advent of digital products, we’ve designed software with an almost zealous respect for UX conventions.

Where should this button go? What should we hide & what should we show? How many clicks does it take the user to find value?

But AI products are evolving rapidly - and the direction of movement is clear; we’re heading towards ‘AI agents’ that behave more like a coworker than they do an algorithm.

In this new world, we can simply give an agent a high-level goal, and then sit back and watch the magic happen. In other words, we can tell an agent we fancy some winter sun, define a budget, then let it suggest an itinerary and even ultimately book a holiday for us.

Or we can summarize a customer painpoint, have the agent filter through research, write a user story, and then generate new code.

This is a HUGE paradigm shift. There are new questions for us to answer.

How do we design the experiences of tomorrow?

Does AI adapt to the UX/UI of today, or does it completely rethink the stack?

In this piece, I look at what agents are, before breaking down some of the early design considerations we've had as we've been building them here at Unakin.


AI Agents - the natural evolution from models

At the UK AI safety summit, Elon Musk stated that no one will have to work in the future.

Over the past year, virtually every single one of us will have heard of and / or used a generative model.

They are, in most cases, fantastically fun and in some cases, fantastically useful. There has been rapid adoption as you can now conjure something seemingly from thin air: a piece of code, an image, an answer and a thousand other things.

But we’re really just in the first inning here. For Musk’s vision of the future to come true, we have to move from models to some form of action-led model - i.e. agents.

These agents behave more like a coworker than they do a model; breaking down high-level goals into tasks, using software independently and taking actions to then complete these tasks without human intervention.

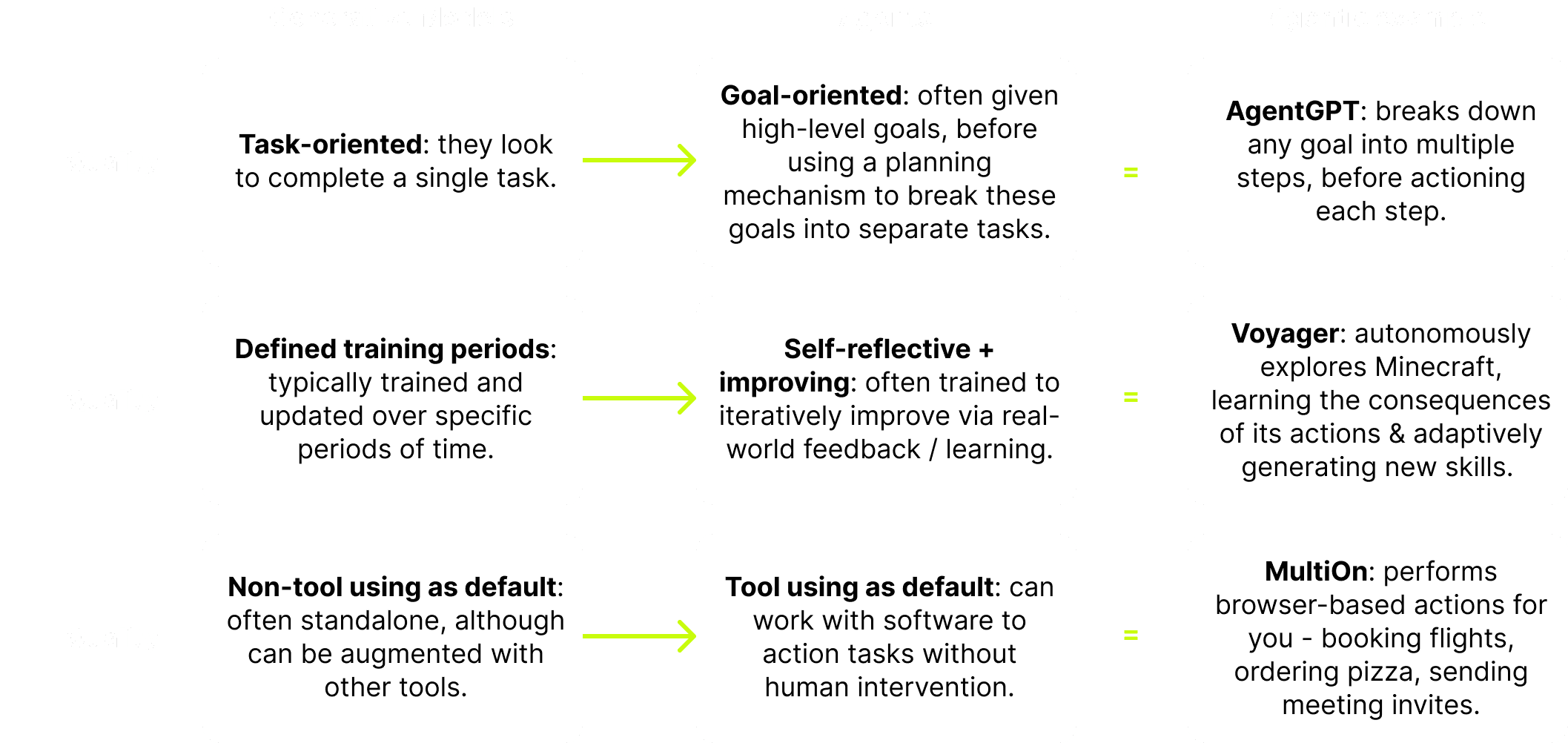

Here are some of the ways models are evolving into agents. Please note that these are fluid concepts which often bleed into one another!

So, what are the design considerations for agents?

We need to know what’s happening…

Models are often unabashedly incorrect. These hallucinations are already becoming troublesome as we increasingly rely on models.

But with agents, these issues can really mess stuff up.

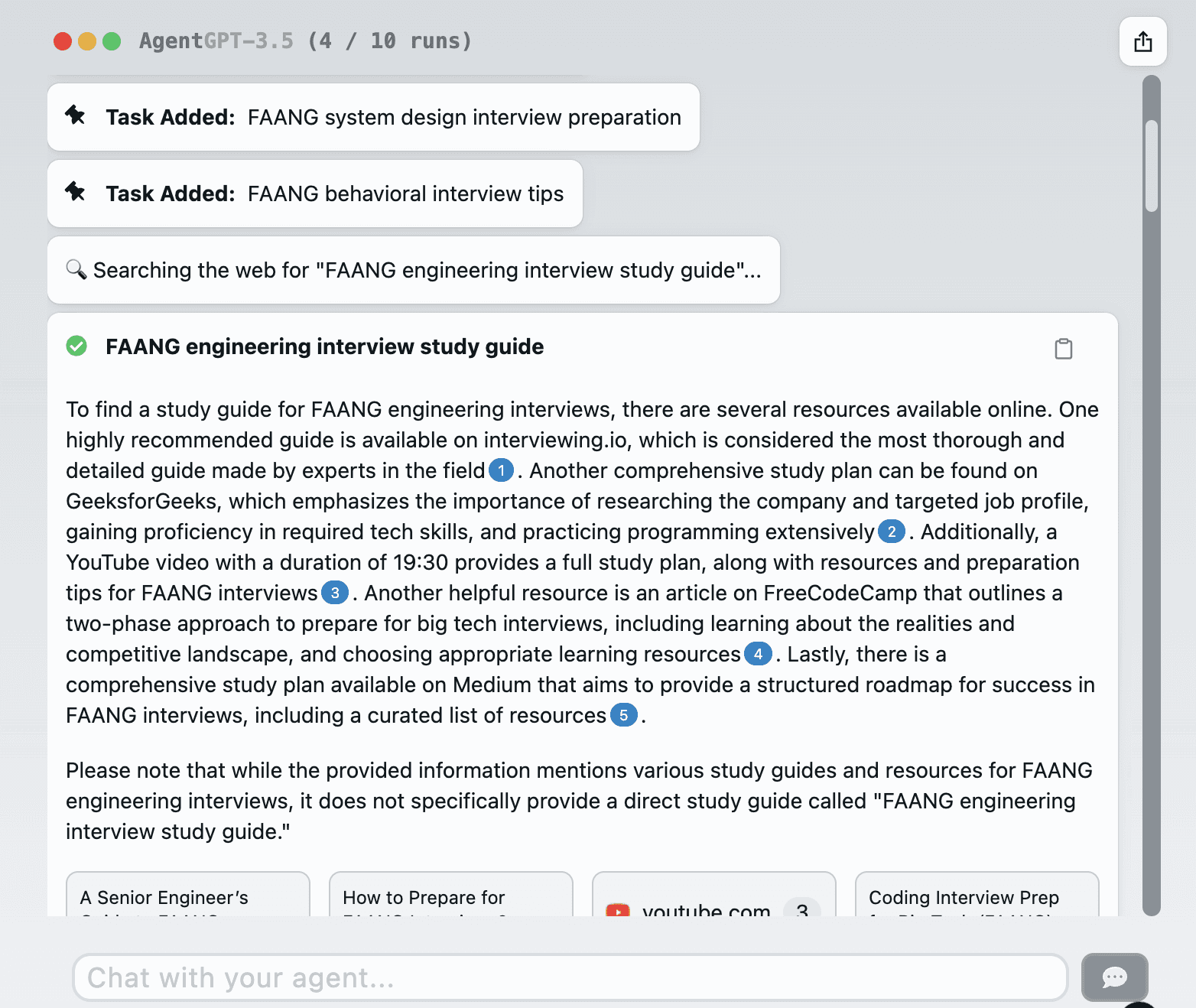

An autonomous agent is expected to complete several tasks sequentially… and mistakes will often derail its progress pretty significantly. For high-value requests, users are going to want complete visibility into the inner workings of the agent.

⛑️ Fix:

Most agent products tackle this by displaying their entire process, listing out each task and the actions the agent takes to complete it.

AgentGPT is one example of this approach in action.
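To make the idea of a visible process concrete, here is a minimal sketch of a step-by-step trace that a UI could render. It is not how AgentGPT or any other product implements this; the `Step` and `Trace` names, their fields, and the sample holiday steps are all hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Step:
    # Hypothetical record of one thing the agent did.
    task: str    # the sub-task the agent is working on
    action: str  # what the agent did to progress it
    result: str  # what came back


@dataclass
class Trace:
    # Hypothetical running log for one high-level goal.
    goal: str
    steps: list[Step] = field(default_factory=list)

    def record(self, task: str, action: str, result: str) -> None:
        """Append one step so the user can see it as soon as it happens."""
        self.steps.append(Step(task, action, result))

    def render(self) -> str:
        """Return a numbered, human-readable view of everything done so far."""
        lines = [f"Goal: {self.goal}"]
        for number, step in enumerate(self.steps, start=1):
            lines.append(f"{number}. {step.task} | {step.action} -> {step.result}")
        return "\n".join(lines)


trace = Trace(goal="Plan a winter sun holiday")
trace.record("Find destinations", "searched for warm places in January", "3 candidates")
trace.record("Check budget", "compared prices", "2 within budget")
print(trace.render())
```

Recording each step as structured data, rather than as free text, leaves the design team free to show it as a checklist, a timeline or a collapsible log.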

We need to be able to intervene…

Following on from the last point, when we entrust an agent to work autonomously, we also need to be able to intervene to stop or rewind mistakes.

What happens when your personal assistant AI mistakenly tries to order 10 pizzas instead of one? Or book a flight to Australia instead of Austria (ok, it can probably be forgiven for this!)?

⛑️ Fix:

We’ll need to be able to manage workflows, marking mission-critical steps as automatically requiring human intervention.

Even outside of mission-critical steps, we’ll need to be able to intervene at any stage if the agents go off the rails.
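As a rough sketch of what such a workflow could look like, the snippet below marks some actions as critical and asks a human before running them, and also checks a stop signal before every step. The names `Action`, `run_workflow`, `approve` and `should_stop` are assumptions made for illustration, not an existing API.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Action:
    # Hypothetical unit of work in an agent workflow.
    name: str
    critical: bool = False  # critical steps always need a human sign-off


def run_workflow(
    actions: list[Action],
    approve: Callable[[Action], bool],
    should_stop: Callable[[], bool] = lambda: False,
) -> tuple[list[str], list[str]]:
    """Run actions in order and return (done, skipped) lists of action names.

    `approve` is asked before every critical action; `should_stop` is checked
    before every action so a human can halt the agent at any stage.
    """
    done: list[str] = []
    skipped: list[str] = []
    for action in actions:
        if should_stop():
            break  # human pulled the plug; remaining actions never run
        if action.critical and not approve(action):
            skipped.append(action.name)
            continue
        done.append(action.name)  # a real agent would execute the action here
    return done, skipped


actions = [
    Action("add 10 pizzas to basket"),
    Action("pay for basket", critical=True),
]
# The human rejects the payment, so the mistaken order never goes through.
done, skipped = run_workflow(actions, approve=lambda action: False)
print(done, skipped)
```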

We’ll need version control, where we can wind back decisions easily, even if these decisions get approved originally.
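One simple way to picture this kind of version control is a history of snapshots that can be wound back to any earlier point. The sketch below assumes the agent's decisions fit in a plain dictionary; `VersionedState`, `commit` and `rewind` are hypothetical names, and a real product would also have to undo side effects in the outside world (a booked flight, a sent email), which this does not attempt.

```python
import copy


class VersionedState:
    """Hypothetical snapshot history for an agent's decisions."""

    def __init__(self, initial: dict) -> None:
        self._history = [copy.deepcopy(initial)]

    @property
    def current(self) -> dict:
        return self._history[-1]

    def commit(self, new_state: dict) -> int:
        """Store a snapshot and return its version number."""
        self._history.append(copy.deepcopy(new_state))
        return len(self._history) - 1

    def rewind(self, version: int) -> dict:
        """Discard every snapshot after `version` and return that snapshot."""
        if not 0 <= version < len(self._history):
            raise IndexError(f"unknown version {version}")
        self._history = self._history[: version + 1]
        return self.current


state = VersionedState({"destination": None})
approved = state.commit({"destination": "Austria"})
state.commit({"destination": "Australia"})  # approved at the time, but wrong
state.rewind(approved)
print(state.current)
```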

We need to be able to understand what agents can and can't do…

Agents are getting better every single day. New models are popping up to power them to new capabilities. Over the coming months / years, they’ll be able to shoulder an increasing workload.

But the pace and breadth of improvement breeds confusion too. How can we expect people to know their Llamas from their LLaVAs anyway?

⛑️ Fix:

One of Nielsen Norman Group's key usability heuristics calls for a match between the system and the real world.

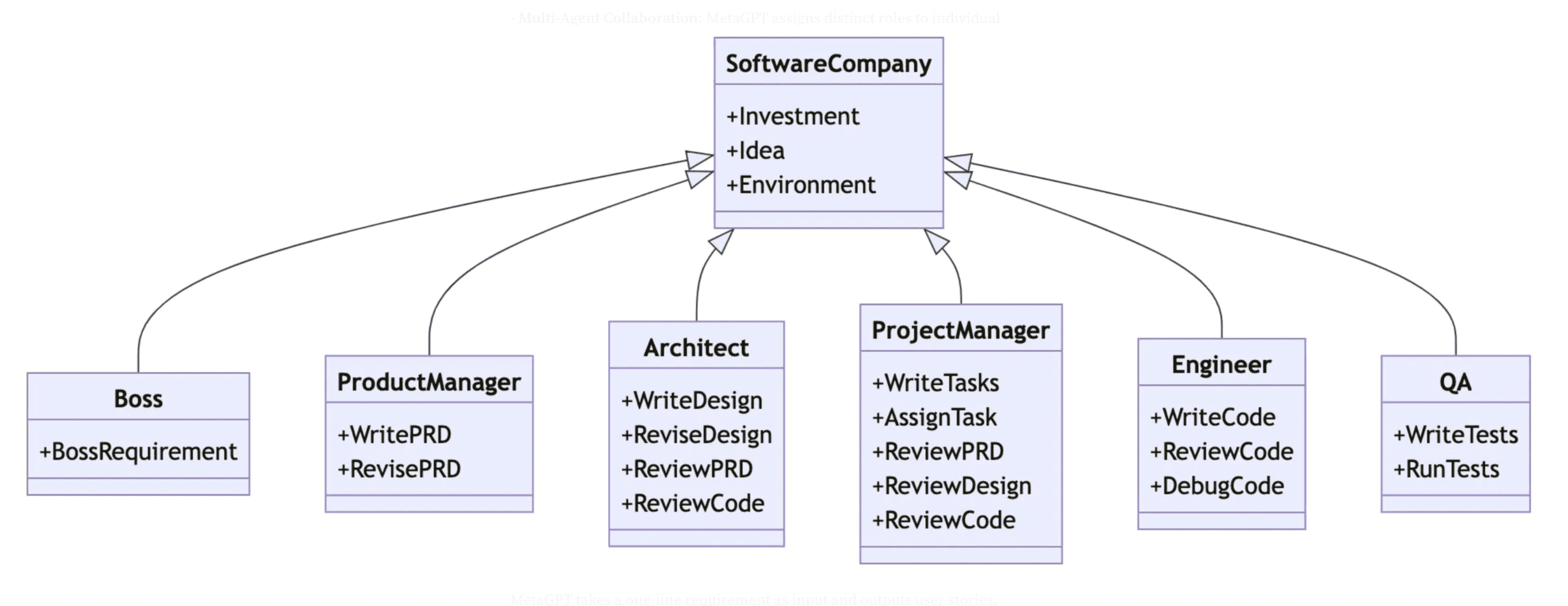

To do this, one thing I’m seeing more regularly is the personification of agents, enabling users to match the agent’s skills with their mental model of the world.

Some - like MetaGPT - are creating specific roles for different agents to fulfil. This helps us match our needs to the process and to the eventual outcome.

As we dream of mass adoption, this internal framework will be super important in delivering an accessible product to non-technical team members.

We need to be able to manage agents…

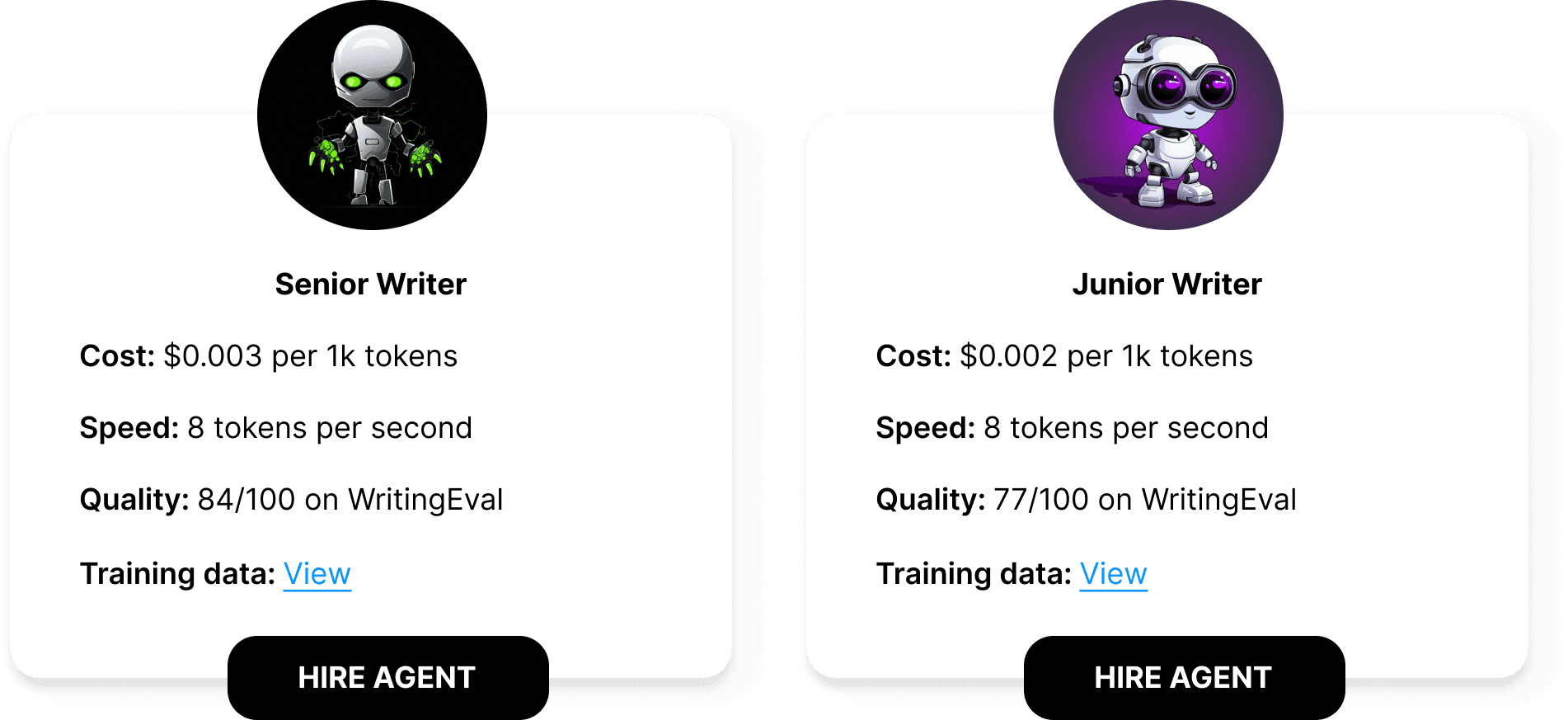

If we expect that agents will eventually be able to perform chunks of work against an agreement, does this mean that we’ll need some mechanism for hiring them?

Beyond hiring (and even firing?) them, we’ll need to be able to manage what information they can access, what they can output, who can use them, how much can be spent and so much more…

⛑️ Fix:

Each vendor will have to deliver management portals (or some alternative) to track the work and performance of agents, as well as the cost.

It’ll be fascinating to see if buyers ultimately end up ‘hiring’ agents just like they would previously hire someone on Upwork; evaluating the best options against quality, speed and price.
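To illustrate the kind of controls a management portal might sit on top of, here is a small sketch of a per-agent policy covering who can use the agent, which information it can read and how much it can spend. `AgentPolicy` and its fields are hypothetical, and the users, sources and amounts are made up for the example.

```python
from dataclasses import dataclass


@dataclass
class AgentPolicy:
    # Hypothetical guardrails an admin could set for one agent.
    allowed_users: set[str]
    allowed_sources: set[str]
    budget: float
    spent: float = 0.0

    def can_use(self, user: str) -> bool:
        return user in self.allowed_users

    def can_read(self, source: str) -> bool:
        return source in self.allowed_sources

    def charge(self, amount: float) -> bool:
        """Record a spend if it fits the remaining budget; refuse otherwise."""
        if amount < 0:
            raise ValueError("amount must be non-negative")
        if self.spent + amount > self.budget:
            return False
        self.spent += amount
        return True


policy = AgentPolicy(
    allowed_users={"ollie"},
    allowed_sources={"research-notes"},
    budget=10.0,
)
print(policy.can_use("ollie"), policy.can_read("payroll"))
print(policy.charge(6.0), policy.charge(5.0), policy.spent)
```

Tracking `spent` next to `budget` also gives the portal the cost figure it needs to report back to whoever 'hired' the agent.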

We need to be able to prompt and receive in the most suitable medium…

UX is important precisely because it enables us to find the information we want in the shortest amount of time possible. We don’t live in a world of text, and an agent-native platform that relies on text would pretty quickly become unusable.

Chat inputs - and chat outputs - are the answer now… but they won't be forever!

⛑️ Fix:

Generative UI. Based on the answer, an agent could generate a custom UI that best highlights relevant information. A list of prospective targets becomes a CRM. A feature is redesigned to show a direct comparison. A graph is generated to illustrate outages.
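As a very simplified illustration, the sketch below picks a UI component from the shape of the agent's answer: time-stamped rows become a chart, other lists of records become a table, and anything else falls back to text. The `choose_view` function, its rules and the component names are assumptions made for this example. A real generative UI system would be far richer, and this is not a description of how fixie.ai or any other product works.

```python
def choose_view(answer):
    """Return a hypothetical UI spec (a plain dict) for an agent's answer."""
    is_records = (
        isinstance(answer, list)
        and len(answer) > 0
        and all(isinstance(row, dict) for row in answer)
    )
    if is_records:
        columns = list(answer[0].keys())
        if "timestamp" in columns:
            # Time-stamped rows read best as a graph, e.g. outages over time.
            series = [c for c in columns if c != "timestamp"]
            return {"component": "chart", "x": "timestamp", "series": series, "data": answer}
        # Any other list of records reads best as a table, e.g. a CRM-style list.
        return {"component": "table", "columns": columns, "rows": answer}
    # Fall back to plain text when nothing better fits.
    return {"component": "text", "body": str(answer)}


prospects = [{"name": "Acme", "stage": "lead"}, {"name": "Globex", "stage": "demo"}]
outages = [{"timestamp": "2023-11-01", "minutes_down": 12}]
print(choose_view(prospects)["component"])
print(choose_view(outages)["component"])
print(choose_view("All systems normal")["component"])
```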

Perhaps in the not too distant future, every UI will be adapted to each specific user based on what they want to achieve.

AI agent platform fixie.ai is working on something like this - check out the gif below:

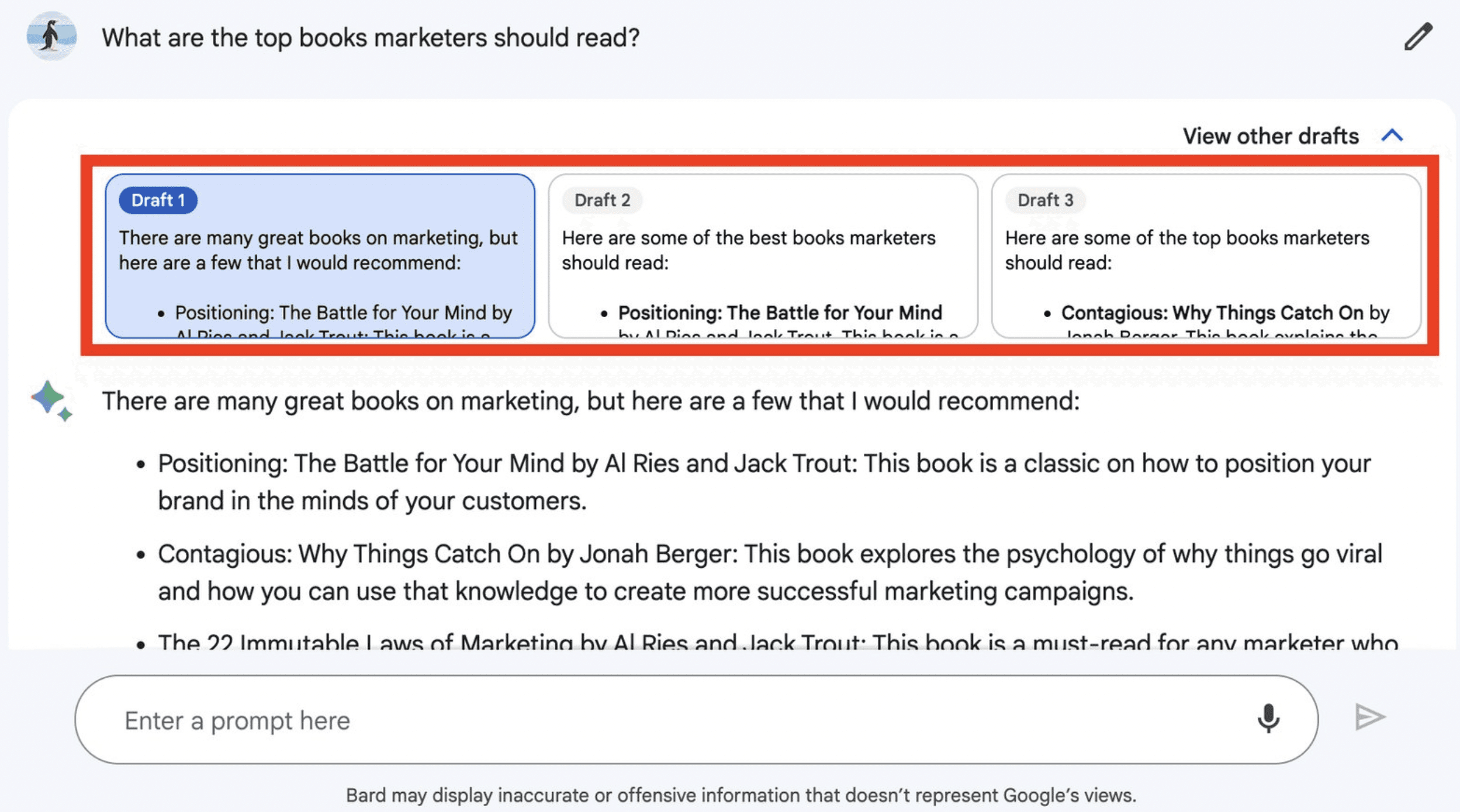

We need to help the agents get better over time…

A key moat for agents will be the feedback they get from users or their environment. This feedback becomes a proprietary, high-quality dataset that will enable agents to produce better, more aligned outcomes for their users.

Design, engineering and ML teams should work closely together to maximize the benefits of this learning mechanism.

⛑️ Fix:

One way of doing this is to provide multiple options to the user, and bake the choices made back into that proprietary dataset.

Google's Bard, for example, prepares multiple drafts for the user. The user's choice creates a preference feedback loop for the model, which then informs the quality of future responses.

Agent products will have similar opportunities to develop very contextual & proprietary data moats within their typical user workflow.
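The multiple-options loop described above can be sketched as a small function that stores which draft the user picked and which ones they passed over. `PreferenceRecord` and `record_choice` are hypothetical names, the in-memory list stands in for whatever store a real product would use, and how the records are later used for training is left out entirely.

```python
from dataclasses import dataclass


@dataclass
class PreferenceRecord:
    # Hypothetical row in a preference dataset.
    prompt: str
    chosen: str
    rejected: list[str]


def record_choice(
    prompt: str,
    drafts: list[str],
    chosen_index: int,
    dataset: list[PreferenceRecord],
) -> PreferenceRecord:
    """Append the user's pick, plus the drafts they passed over, to the dataset."""
    if not 0 <= chosen_index < len(drafts):
        raise IndexError("chosen_index does not match any draft")
    record = PreferenceRecord(
        prompt=prompt,
        chosen=drafts[chosen_index],
        rejected=[d for i, d in enumerate(drafts) if i != chosen_index],
    )
    dataset.append(record)
    return record


dataset: list[PreferenceRecord] = []
drafts = ["Short summary", "Detailed summary", "Bullet-point summary"]
record_choice("Summarize the customer painpoint", drafts, 1, dataset)
print(dataset[0].chosen, dataset[0].rejected)
```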

Final word:

AI agents represent a new software paradigm - a re-imagining of how we interact with machines… and ultimately, how we work and play.

We're learning new things every day about how users interact with agents… so stay tuned for updates!

Would love to hear your learnings, so ping me at ollie@unakin.ai if you'd like to discuss this topic…